3 Critical Considerations for Future Liquid Cooling Deployments

Ben Sutton of CoolIT Systems explains how to engineer server-, rack- and facility-level solutions to stay ahead of rising cooling requirements.

- Server Level: Heat Capture

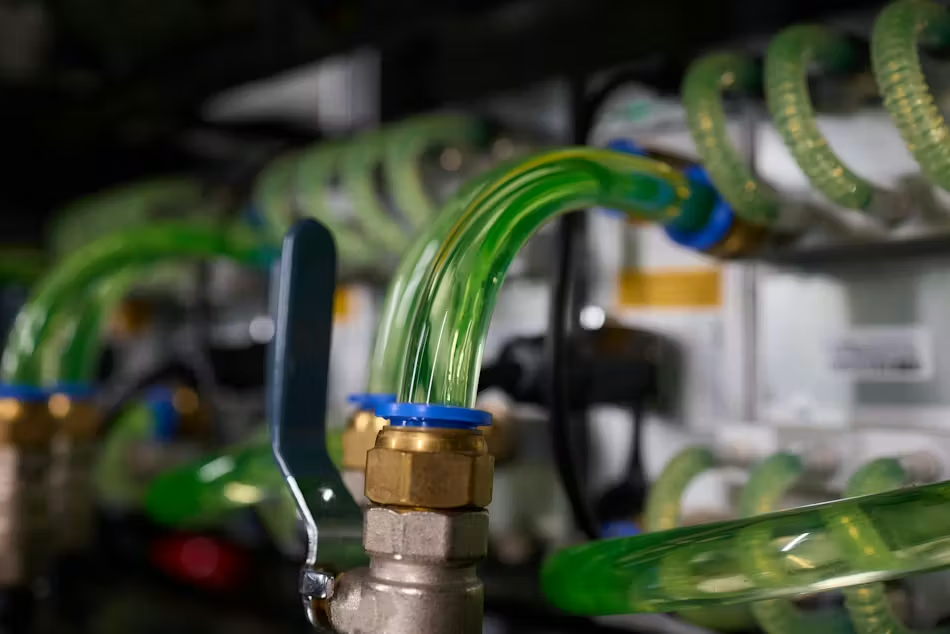

AI accelerators – GPUs and specialized ASICs – are driving the transition to liquid cooling. Managing the heat flux and thermal design power (TDP) of these accelerators is a key part of server design. In 2023, AI accelerators’ heat flux values exceeded 50W/cm² and TDPs exceeded 500W, and both factors made liquid cooling necessary. Today, TDP is well above 1kW per chip and, while heat density is not rising quite as fast, it is still on the rise.

Peripheral components are also becoming a thermal challenge. As rack densities rise, the typical hybrid configuration, with coldplates on the processors and air cooling for the peripheral components, is becoming less feasible.

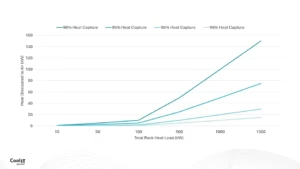

The graph below shows that if a 500kW rack has 90% of its heat captured by the coldplates, 50kW still remains to be dissipated from other components. This is a stretch for even the best air-cooling setups. Coldplates are therefore evolving to capture heat from all components, not just the processor.
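The arithmetic behind that example can be sketched in a few lines. This is a minimal illustration only: the function name `residual_air_heat_kw` is hypothetical, and the 95% and 99% cases are added purely to show how the air-side load shrinks as liquid capture rises.

```python
def residual_air_heat_kw(rack_power_kw: float, liquid_capture_fraction: float) -> float:
    """Heat (kW) left for air cooling after the coldplates capture their share.

    Hypothetical helper for illustration; not a vendor sizing tool.
    """
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    return rack_power_kw * (1.0 - liquid_capture_fraction)


if __name__ == "__main__":
    # 500 kW rack from the text; capture fractions above 90% are illustrative.
    for capture in (0.90, 0.95, 0.99):
        remaining = residual_air_heat_kw(500.0, capture)
        print(f"{capture:.0%} liquid capture -> {remaining:.0f} kW left for air")
```

At 90% capture this reproduces the 50kW figure above; each additional point of liquid capture removes another 5kW from the air side of a 500kW rack.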

To keep pace, the industry is now targeting >95% heat capture through liquid. More designs are expected to emerge that resemble the full-heat-capture coldplate loop illustrated below. CoolIT has long been producing direct liquid cooled coldplate designs with near-100% heat capture for several generations of supercomputer systems.

- Rack & Row Level: Flow Rates

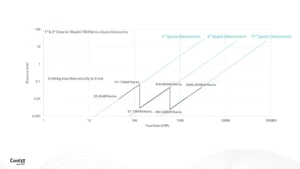

Liquid cooling infrastructure must scale with AI. That means planning for larger pipe diameters and centralized CDUs. Flow rates of 1.0–1.5 LPM/kW are becoming the industry standard. This will drive the need for larger quick disconnects with lower pressure drop. Initially, this will drive a move from 1” to 2”, and soon enough it will push beyond that. Rack-level connectors must be sized to handle future flow demands while minimizing pressure drop.
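As a rough sketch of what the 1.0–1.5 LPM/kW guideline implies, the snippet below converts a liquid-cooled heat load into a required flow range. The function name `required_flow_lpm` and the example loads are assumptions chosen for illustration; a real design would also account for coolant properties and allowable temperature rise.

```python
def required_flow_lpm(liquid_heat_load_kw: float,
                      lpm_per_kw_range: tuple[float, float] = (1.0, 1.5)) -> tuple[float, float]:
    """Return the (low, high) coolant flow in LPM for a given liquid heat load.

    The default range is the 1.0-1.5 LPM/kW guideline quoted in the text.
    """
    if liquid_heat_load_kw < 0:
        raise ValueError("liquid_heat_load_kw must be non-negative")
    low, high = lpm_per_kw_range
    return liquid_heat_load_kw * low, liquid_heat_load_kw * high


if __name__ == "__main__":
    # Example loads are hypothetical rack sizes, not figures from a specific product.
    for load_kw in (100.0, 250.0, 500.0):
        low, high = required_flow_lpm(load_kw)
        print(f"{load_kw:.0f} kW -> {low:.0f} to {high:.0f} LPM")
```

Because flow scales linearly with heat load, a fivefold increase in rack power means a fivefold increase in flow through the same connectors unless they are upsized.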

As the industry moves towards these larger skidded CDUs, pipe diameters will also rise. This is a key consideration for data center build-outs, as larger-diameter piping will help future-proof data centers. The image below shows how this pipe sizing could evolve.

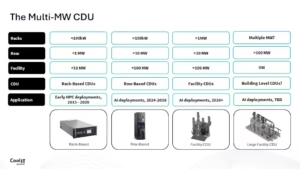
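One way to see why diameter matters is to look at mean fluid velocity, which for a fixed flow rate falls with the square of the inner diameter. The sketch below is a simplification under stated assumptions: it treats the nominal 1” and 2” sizes as the actual inner diameter (real pipe and connector bores differ), and the 150 LPM flow is a hypothetical value. The function name `mean_velocity_m_per_s` is likewise illustrative.

```python
import math

INCH_TO_M = 0.0254  # exact conversion factor


def mean_velocity_m_per_s(flow_lpm: float, inner_diameter_in: float) -> float:
    """Mean fluid velocity (m/s) for a volumetric flow through a round bore.

    Assumes the given diameter is the true inner diameter, which is a
    simplification for nominal pipe and quick-disconnect sizes.
    """
    if inner_diameter_in <= 0:
        raise ValueError("inner_diameter_in must be positive")
    flow_m3_per_s = flow_lpm / 1000.0 / 60.0      # litres/min -> cubic metres/s
    diameter_m = inner_diameter_in * INCH_TO_M
    area_m2 = math.pi * diameter_m ** 2 / 4.0     # cross-sectional area of the bore
    return flow_m3_per_s / area_m2


if __name__ == "__main__":
    flow = 150.0  # hypothetical flow in LPM
    for size_in in (1.0, 2.0):
        v = mean_velocity_m_per_s(flow, size_in)
        print(f'{size_in:.0f}" bore at {flow:.0f} LPM -> {v:.2f} m/s')
```

Doubling the diameter cuts velocity to a quarter at the same flow, which is the basic reason larger piping and connectors leave headroom for future flow demands.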

- Facility Level: Multi-MW CDUs

CDUs are evolving rapidly. What began as rack-based units (<100kW) has scaled to row-based MW units, like CoolIT’s CHx2000. These are more than sufficient for today’s deployments, but future units are likely to become larger, facility-level systems that are moved outside the white space and deployed in much the same way as power infrastructure.

System design must prioritize redundancy to maintain uptime, and it is likely that this will be managed at the system or facility level. CDUs must maintain performance through transient events and respond quickly to changing AI workloads. Both the flow and pressure from the CDU must be maximized in order to future-proof infrastructure.